A common task for most system services is to expose a set of operations that other tasks can invoke. Until now, this meant loads of manual boilerplate code, error-prone request serialization, not quite consistent C++ interfaces, and lots of subtle (and blatant…) bugs. So I set out to find an RPC framework for use in kush-os to handle all of this for me.

Background

What I wanted was simple: the most bare-bones framework that allowed me to have an object Foo in one task, with some method doSomething(), which I could invoke transparently from another task. The keyword there being transparent: I did not want to have to write a single line of serialization or IO code, or even think about the fact that the call was happening in another process.[1] As far as the programmer is concerned, these objects exist in their address space and work the same as any other C++ object.

While it’s tempting to skip down to the implementation section to read about my take on RPC (and I wouldn’t blame you), the discussion on existing solutions and why they didn’t work for me is worth it to underscore the following piece of wisdom: you probably don’t want (or need) to write your own RPC framework.

Typically, transparent RPC like this is achieved by defining the interface you wish to export – through some sort of interface description language (IDL) – and using tooling to convert that IDL into code for the client and server ends of the connection. The IDL compiler, in effect, generates all of the tedious boilerplate code, meaning you need to get it right once – when you’re writing the RPC library and compiler – and never worry about it again. And even if there turns out to be a bug, you can likewise fix it once and simply regenerate all interfaces to get the fixes.

Because I am a Great Programmer™ (or lazy, depending on how you look at it), I set out to find an existing framework that I could adapt for use in kush-os going forward.

Existing Solutions

There are quite a few RPC frameworks out there. And as Wikipedia, quite correctly, puts it: “Many different (often incompatible) technologies have been used to implement the concept.”

gRPC

While I’ve never worked with gRPC myself, I know of a lot of folks who have, as Google APIs seem to use it a lot. It provided all the features I was after, and it uses Protocol Buffers for serialization, which I had a decent bit of experience with and was already considering porting to the system anyhow.

What stopped me from looking too much further into gRPC as an option was realizing that it required HTTP/2 as the underlying transport. In the system, the primary RPC transports are message ports and shared memory: two channels with negligible overhead compared to a network connection. So all of this extra protocol overhead, even if it is a binary protocol, meant added complexity that essentially every single task in the system would have to contend with, and it would likely make it more difficult to reduce or eliminate copies of message data.

Cap’n Proto

I already knew of Cap’n Proto since I had used it in a few previous projects for storage formats, network protocols, and even file formats. Developed by the same guy who was behind version 2 of Protobufs, it fixes a lot of (apparently common) issues with that version of Protobufs, and benefits from the years of experience gained from seeing its wide adoption. What Cap’n Proto also had going for it was excellent CMake integration and a much lower runtime code requirement: a must for something that will become an integral part of the system. Add to that essentially free (de)serialization (there is no explicit transformation step: the data in memory is the data sent over the wire!) and it’s easy to see why I am a big fan of Cap’n Proto.

What I had never played around with was its built-in RPC mechanism: essentially exactly what I was after, plus it had a “time travel” feature that amounted to clever use of promises to enable pipelining of method calls. All of this is enabled by the lower-level kj library that Cap’n Proto is built on, part of which is the extensive async IO support relied on by this RPC mechanism.

And that’s exactly where the problem was: there were thousands of lines of supporting async IO code that expected dozens of syscalls and library functions I didn’t have, and the system didn’t even support at the moment. On top of that, it implemented coroutine-style multiprocessing which required further libc support (think setjmp/swapcontext and friends) that I would have had to implement.

These improvements definitely would have made the system a lot easier to target for porting software, and I no doubt would have enjoyed being able to use a mature, proven RPC framework. Still, I decided against spending weeks building out all these APIs: I was not interested in building a UNIX clone at the start of this project, and that has not changed. The alternative, implementing my own versions of them and diving into the internals of the kj framework to add support, would have made maintaining my fork of the library even more of a pain than forking a library for minor tweaks to a custom, obscure hobby OS already is.

And for what it’s worth, the next time I’ve got a little bit more to work with than an entire OS I’ve written from the ground up, I’ll be sure to give its RPC capabilities a whirl.

Others

Those were the two major options I explored. Several other generic RPC frameworks didn’t fit the bill for one reason or another – whether it was their license, dependencies, awkwardness to use, or general lack of documentation. But what I ended up finding were loads of proprietary frameworks or references to using the (somewhat undocumented) local RPC facilities used by Windows.[2]

Honorable mention goes to a rather overlooked (and apparently, nonexistent, as far as documentation is concerned) part of Apple’s XNU/Mach kernel: MiG, or Mach Interface Generator, the IDL compiler used on macOS and friends. Even though the relevant portions of code are available as open-source, they haven’t really been touched in decades and are a mess of printfs and Mach-isms, but the general idea of what I’m trying to accomplish is the same.

From the title of the post, you’ve probably already gathered that this journey ended up in me rolling my own RPC framework, the very thing I had hoped to avoid by actually doing a little bit of research before I simply jumped in at the deep end writing code with no clear path. At least now I had a pretty good idea of what I was getting myself into.

Baby’s First RPC Framework

I should probably preface this with an important disclaimer: I really have no idea what I’m doing, and I threw this framework together over two sleepless days and nights with entirely too much help from some funny lettuce. That being said… it works remarkably well, so I can’t possibly be too far off from how this is supposed to work.

As most of the magic in the way I wanted the RPC framework to work hinged on lots and lots of fun autogenerated code, the majority of the time was spent on the very creatively named idlc… which compiles IDLs. (Bet that was a tough one to guess!) Beyond that, there is a small bit of runtime support provided by the existing RPC library, namely to implement the actual send/receive semantics over the unidirectional message ports.

Design Overview

Going into this adventure with a fair bit of research already done on existing systems, I had a rough idea of how an RPC framework should look. It’s nothing particularly revolutionary to anyone who’s used a REST API: the request is serialized, sent over an abstract channel, deserialized, and the process is repeated to send the reply, but in the opposite direction from server to client.

In my implementation, the method calls are split up into two parts: the request, which contains the actual arguments passed to the function; and an optional reply, which contains any values returned from the method. Making the reply optional allows for the concept of asynchronous messages, which do not wait for a response from the remote end before returning. And since the reply can essentially be an empty set, a method that returns nothing does not have to be asynchronous: it can still wait for an (empty) reply.
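To make the request/reply split concrete, here is a minimal sketch. Every name in it (`Request`, `Reply`, `Handler`, `invoke`, `Kind`) is made up for illustration, and the "remote end" is just a callback; it only shows how a synchronous call with an empty reply differs from an asynchronous call with no reply at all.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <optional>
#include <string>

// Hypothetical stand-ins for the generated message types.
struct Request { std::uint64_t methodId; std::string args; };
struct Reply   { std::string values; };  // may legitimately be empty

// The remote end, reduced to a callback that handles one request.
using Handler = std::function<Reply(const Request &)>;

enum class Kind { Sync, Async };

// Sync calls always produce a reply (possibly empty); async calls never do.
std::optional<Reply> invoke(const Handler &remote, const Request &req, Kind kind) {
    Reply reply = remote(req);  // a real transport would enqueue the request instead
    if (kind == Kind::Async) {
        return std::nullopt;    // the caller does not wait, so nothing comes back
    }
    return reply;
}
```

The distinction the caller sees is between "a reply with nothing in it" and "no reply", which is why a void-returning method can still be synchronous.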

Early on I decided to go with Cap’n Proto as the wire format for messages. I had lots of experience with it, and the zero overhead serialization appealed to me as it would allow me to (eventually…) implement zero-copy RPC where messages are built in place in shared memory regions, and the kernel only needs to notify the remote end that something happened, rather than copying the entire message as well. This was the defining reason I chose it over the messagepack library I had already ported to the system.

Plus, thanks to the “lite” version of the Cap’n Proto library, the binary size overhead is only about 100K or so. That cost is shared among all RPC clients in a task, and since RPC will eventually form the backbone of almost every API call, it’s a negligible overhead, especially when compared to some other, heavier serialization libraries.

Not rolling my own serialization format also meant that a lot of the “hard” security issues in building an RPC protocol were not something I had to think about too much. There are of course still plenty of chances for bugs (and I’d be full of shit to claim this framework is utterly devoid of them) but these are essentially limited to my much smaller codebase, and potential misuse of the Cap’n Proto library, though their design philosophy is to not design APIs such that you can shoot yourself in the foot with them.[3]

Interface Description

Most of the magic of the RPC framework stems from the way interfaces are described: a plain text file with a simple syntax, where the arguments and return values of all methods are defined: an interface description language. This interface description is then parsed by the IDL compiler, idlc, and used as part of the code generation step to generate the C++ server and client stubs, as well as the Cap’n Proto structs used to encode arguments and return values.

I think the format is relatively self-explanatory. Below is one of the example interfaces included with the IDL compiler for testing purposes:

    interface Hello {
        messageWithFixedId [identifier=0xdeadbeef06969] [pland=delicious] () =|
        sayHello(message: String) => (reply: String)
        sayHello2(message: String, number: UInt32) => (reply: String)
        dataMsg(blob: Blob) =|
        poke() => ()
    }

Hopefully, the way this works is relatively apparent. As mentioned previously, this framework supports asynchronous methods, where no reply is received, and synchronous methods, which do have a reply, even if it is empty. That is what the =| and => symbols indicate: the former means the method is asynchronous, and will not return anything; the latter indicates the following set is the return value of the method. Supported types are bools, signed and unsigned 8, 16, 32, and 64-bit integers, 32 and 64-bit floating point, strings, and blobs; eventually, custom C++ types will be supported via arbitrary serialization methods.

Methods can have decorators applied, which are key/value pairs used as metadata in the compiler – these are the little [key=value] chunks between the name of a method and its arguments. Currently, only the identifier key is considered by the compiler. Each method has a unique 64-bit identifier that the autogenerated code uses to identify it; by default, it is generated by hashing the name of the method. Pinning the identifier with this decorator allows renaming a method without breaking existing clients, as long as the arguments don’t change.[4]
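As a rough illustration of how such identifiers could be derived, the sketch below hashes a method name with 64-bit FNV-1a and lets an explicit identifier take precedence. The post does not say which hash idlc actually uses, so FNV-1a is purely a stand-in, and `fnv1a64` and `methodId` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <string_view>

// 64-bit FNV-1a. Assumption: the real compiler may use a different hash.
constexpr std::uint64_t fnv1a64(std::string_view text) {
    std::uint64_t hash = 0xcbf29ce484222325ULL;  // FNV offset basis
    for (unsigned char c : text) {
        hash ^= c;
        hash *= 0x100000001b3ULL;                // FNV prime
    }
    return hash;
}

// An explicit [identifier=...] decorator wins; otherwise the name is hashed.
constexpr std::uint64_t methodId(std::string_view name,
                                 std::optional<std::uint64_t> pinned = std::nullopt) {
    return pinned ? *pinned : fnv1a64(name);
}
```

With a scheme like this, a method whose identifier is pinned keeps the same value on the wire no matter what it is later renamed to, while an unpinned method gets a new identifier as soon as its name changes.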

Since the IDL is so simple, I was half tempted to do a shitty hand-written parser with lots of regex and friends. I decided against that, instead defining it as a parsing expression grammar. This was far simpler than expected, thanks to the excellent PEGTL library, which allows defining a PEG in a single header by composing a bunch of templates. It was far easier than my last time building a grammar – though that was a few years ago, in Java, in a class I hated with a prof whom I despised, at 8 in the morning…

Various components of the grammar (starts of methods, argument pairs, etc.) trigger actions on an InterfaceBuilder class. This constructs an interface in memory, piece by piece, as its IDL is parsed, eventually resulting in a fully complete interface description that is then passed to the code generator pass.
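The builder pattern described here might look roughly like the following sketch. The method names (`beginInterface`, `beginMethod`, `addArgument`, `addReturn`, `markAsync`, `finish`) and the data structures are assumptions for illustration, not the actual InterfaceBuilder API; each call stands in for one grammar action firing.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

struct Field { std::string name, type; };
struct Method {
    std::string name;
    std::vector<Field> args, returns;
    bool async = false;  // true for "=|" methods
};
struct Interface { std::string name; std::vector<Method> methods; };

// Hypothetical builder: the parser calls these as it recognizes each piece.
class InterfaceBuilder {
public:
    void beginInterface(std::string name) { iface_.name = std::move(name); }
    void beginMethod(std::string name) {
        Method m;
        m.name = std::move(name);
        iface_.methods.push_back(std::move(m));
    }
    void addArgument(std::string name, std::string type) {
        current().args.push_back({std::move(name), std::move(type)});
    }
    void addReturn(std::string name, std::string type) {
        current().returns.push_back({std::move(name), std::move(type)});
    }
    void markAsync() { current().async = true; }
    // Hand the finished description over to the code generator.
    Interface finish() { return std::move(iface_); }

private:
    Method &current() {
        if (iface_.methods.empty()) throw std::logic_error("no method open");
        return iface_.methods.back();
    }
    Interface iface_;
};
```

Keeping the grammar actions this thin means the parser never needs to know what the code generator does with the result.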

Code Generation

As I hinted at earlier, there are two distinct stages of code generation: first, the Cap’n Proto structures that encode all messages are generated; followed by the C++ server and client stubs.

Messages

Creating the Cap’n Proto structs is relatively straightforward, not least because the Cap’n Proto syntax is incredibly simple. Every method call gets two structures: one for the request, and one for the reply; unless it’s an asynchronous call, in which case we skip generating the reply struct. Each argument (or return value) is converted into a field on the appropriate struct.

This makes serializing the arguments and return values as simple as constructing one of these messages and invoking the appropriate getters/setters. And because Cap’n Proto can serialize empty messages without complaining, no special treatment for void-returning synchronous functions is needed.
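A sketch of what this generation step could boil down to is below. The `Request`/`Reply` struct naming, the capitalization of the method name, and the `emitStruct`/`emitMessages` helpers are all assumptions; only the emitted schema syntax (`name @N :Type;`) is standard Cap’n Proto.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

struct Field { std::string name, capnpType; };

// Emit one Cap'n Proto struct with sequentially numbered fields.
std::string emitStruct(const std::string &name, const std::vector<Field> &fields) {
    std::ostringstream out;
    out << "struct " << name << " {\n";
    for (std::size_t i = 0; i < fields.size(); ++i) {
        out << "  " << fields[i].name << " @" << i << " :" << fields[i].capnpType << ";\n";
    }
    out << "}\n";
    return out.str();
}

// Always a request struct; a reply struct only for synchronous methods.
std::string emitMessages(std::string method, const std::vector<Field> &args,
                         const std::vector<Field> &returns, bool async) {
    if (!method.empty()) {  // Cap'n Proto type names start with a capital letter
        method[0] = static_cast<char>(std::toupper(static_cast<unsigned char>(method[0])));
    }
    std::string out = emitStruct(method + "Request", args);
    if (!async) out += emitStruct(method + "Reply", returns);
    return out;
}
```

Note that `poke() => ()` would still get an (empty) reply struct under this scheme, which matches the point above about void-returning synchronous methods needing no special treatment.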

The resulting Cap’n Proto files then need to be run through the Cap’n Proto compiler to generate C++ classes. This is not currently done by the idlc, though I’d like to add this in so it can directly output C++ code for struct serialization.

C++ Stubs

Once the message format has been generated, the compiler starts generating the server and client stubs. These are both relatively similar in the way that they work: both have autogenerated helper methods which are responsible for building up Cap’n Proto messages, prepending the identifier header, and sending them; as well as for decoding received messages.

The main difference is that the server stub has an added “main loop” that listens forever for messages from the IO stream, and requires the actual server to subclass the stub and implement several virtual methods – the actual behavior of the RPC. The generated client stubs can be used directly, however; the public methods on it are the same as those defined in the interface and behave as if they were local. Methods can throw exceptions if the remote connection goes away or the message couldn’t be en/decoded correctly.
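The shape of a generated server stub can be sketched as follows. This is not the code idlc emits: plain strings stand in for Cap’n Proto messages, the header is assumed to be a bare 64-bit identifier in host byte order, and all names (`frame`, `HelloServerStub`, `implSayHello`, `kSayHello`, …) are hypothetical. A real stub would call `handle` from its main loop for every message read from the IO stream.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical method identifiers; real ones would come from the IDL compiler.
constexpr std::uint64_t kSayHello = 0x1001, kPoke = 0x1002;

// Prepend the 64-bit method identifier to an already encoded payload.
Bytes frame(std::uint64_t id, const std::string &payload) {
    Bytes out(sizeof id);
    std::memcpy(out.data(), &id, sizeof id);
    out.insert(out.end(), payload.begin(), payload.end());
    return out;
}

class HelloServerStub {
public:
    virtual ~HelloServerStub() = default;

    // Decode one framed request, dispatch it, and return the framed reply.
    Bytes handle(const Bytes &msg) {
        if (msg.size() < sizeof(std::uint64_t)) throw std::runtime_error("short message");
        std::uint64_t id = 0;
        std::memcpy(&id, msg.data(), sizeof id);
        const std::string payload(msg.begin() + sizeof id, msg.end());
        switch (id) {
            case kSayHello: return frame(id, implSayHello(payload));
            case kPoke:     implPoke(); return frame(id, "");  // empty reply
            default:        throw std::runtime_error("unknown method id");
        }
    }

protected:
    // The actual server subclasses the stub and fills these in.
    virtual std::string implSayHello(const std::string &message) = 0;
    virtual void implPoke() = 0;
};

// A concrete server, here only to exercise the stub.
class HelloServer : public HelloServerStub {
public:
    int pokes = 0;
protected:
    std::string implSayHello(const std::string &message) override { return "Hello, " + message; }
    void implPoke() override { ++pokes; }
};
```

A client stub would be the mirror image: each public method frames its arguments with the matching identifier, sends them, and decodes the reply.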

Eventually, I’d like to be able to catch any exceptions that were raised during the execution of the remote method on the server, and forward them back to the caller; as well as building messages in place on preallocated buffers, to avoid the several memory copies before they are sent.

IO

As you’ve probably gleaned from the rather abstract language about sending and receiving messages, the RPC stubs themselves don’t actually do any IO. They each instead require an IO stream to be provided at creation time, which provides server and client specific methods:

    #include <cstddef>  // std::byte
    #include <span>     // std::span (C++20)

    class ClientRpcIoStream {
    public:
        virtual ~ClientRpcIoStream() = default;

        /// Send the serialized request to the remote connection
        virtual bool sendRequest(const std::span<std::byte> &buf) = 0;
        /// Receive a reply from the remote connection
        virtual bool receiveReply(std::span<std::byte> &outRxBuf) = 0;
    };

    class ServerRpcIoStream {
    public:
        virtual ~ServerRpcIoStream() = default;

        /// Pop oldest message from receive queue
        virtual bool receive(std::span<std::byte> &outRxBuf, const bool block) = 0;
        /// Send a reply to the most recently received message
        virtual bool reply(const std::span<std::byte> &buf) = 0;
    };

The design of this interface is deliberately simple so that it doesn’t impose any undue constraints on the implementation, allowing for a wide variety of backends to actually transmit the message. Currently, libdriver provides IO streams that are backed by kernel ports and support resolving port names via the dispensary.[5]
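To show how little a backend has to provide, here is a sketch of an in-memory loopback stream, the kind of thing that could be handy for unit tests. It is an illustration only: the interfaces are simplified copies of the ones above that use `std::vector<std::byte>` instead of `std::span` so the example stays within C++17, and `ClientStream`, `ServerStream`, and `LoopbackStream` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <utility>
#include <vector>

using Buffer = std::vector<std::byte>;

// Simplified versions of the stream interfaces (vectors instead of spans).
class ClientStream {
public:
    virtual ~ClientStream() = default;
    virtual bool sendRequest(const Buffer &buf) = 0;
    virtual bool receiveReply(Buffer &outRxBuf) = 0;
};
class ServerStream {
public:
    virtual ~ServerStream() = default;
    virtual bool receive(Buffer &outRxBuf, bool block) = 0;
    virtual bool reply(const Buffer &buf) = 0;
};

// One object playing both ends: requests queue up one way, replies the other.
class LoopbackStream : public ClientStream, public ServerStream {
public:
    bool sendRequest(const Buffer &buf) override { requests_.push_back(buf); return true; }
    bool receiveReply(Buffer &out) override { return pop(replies_, out); }
    // `block` is ignored: with a single thread there is nothing to wait on.
    bool receive(Buffer &out, bool /*block*/) override { return pop(requests_, out); }
    bool reply(const Buffer &buf) override { replies_.push_back(buf); return true; }

private:
    // Move the oldest queued message into `out`; false if the queue is empty.
    static bool pop(std::deque<Buffer> &queue, Buffer &out) {
        if (queue.empty()) return false;
        out = std::move(queue.front());
        queue.pop_front();
        return true;
    }
    std::deque<Buffer> requests_, replies_;
};
```

Because the stubs only ever see the abstract stream, swapping this for a port-backed or shared-memory-backed implementation would not require regenerating any code.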

In the future, I plan to add support for a shared memory-based transport, using a lock-free circular buffer between two tasks. The remote task can then be notified of new work via the work loop thread’s notification bits. This would allow for message pipelining, hopefully increasing the performance of the protocol significantly and allowing us to take full advantage of the extremely low overhead of Cap’n Proto.
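For reference, the general technique behind such a transport is a single-producer/single-consumer ring buffer. The sketch below shows the textbook version using `std::atomic`; it is not the planned kush-os implementation, `SpscRing` is a made-up name, and a real shared-memory variant would additionally have to place the structure in the shared region and rely on the atomics being lock-free there.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Single-producer/single-consumer ring buffer; N must be a power of two.
template <typename T, std::size_t N>
class SpscRing {
    static_assert(N >= 2 && (N & (N - 1)) == 0, "N must be a power of two");

public:
    // Producer side: returns false when the ring is full.
    bool push(const T &value) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t tail = tail_.load(std::memory_order_acquire);
        if (head - tail == N) return false;
        slots_[head & (N - 1)] = value;
        head_.store(head + 1, std::memory_order_release);  // publish the slot
        return true;
    }

    // Consumer side: returns an empty optional when the ring is empty.
    std::optional<T> pop() {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        const std::size_t head = head_.load(std::memory_order_acquire);
        if (head == tail) return std::nullopt;
        T value = slots_[tail & (N - 1)];
        tail_.store(tail + 1, std::memory_order_release);  // free the slot
        return value;
    }

private:
    std::array<T, N> slots_{};
    std::atomic<std::size_t> head_{0};  // next slot to write (producer-owned)
    std::atomic<std::size_t> tail_{0};  // next slot to read (consumer-owned)
};
```

Since the producer can keep pushing until the ring is full without waiting for the consumer, several requests can be in flight at once, which is what makes pipelining possible.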

Conclusion

Overall, this was a successful little adventure into a weird little part of systems programming that nobody seems to have ever documented… not that this is anything close to documentation. While there is obviously a significant amount of room for improvement in this RPC framework, it seems to work quite well already so it should provide a decent base to build on. However, the code generation step needs to be cleaned up because it’s quite frankly… disgusting.

I’ve started moving all of the drivers, including driverman, over to using this new RPC mechanism. The client code is embedded in libdriver for all drivers to communicate with the driver manager, to interact with the device tree, load device drivers, query properties, and other such fun things. It really could not be simpler than including the generated C++ files; and if I decide some methods need a change, I update my IDL, regenerate the stubs, and I’m good to go. I really cannot overstate how huge this is from a productivity standpoint: before, I’d easily spend several hours building out bespoke RPC methods.

Nevertheless, there’s much work to be done: for one, Cap’n Proto has support for a generic list type, which would be convenient to map to something like std::vector for function arguments and return values. Likewise, multiple return values aren’t yet supported, and serializing custom types is also not yet supported – how I’ll implement that is unclear, but likely will consist of encode/decode methods that produce/consume blobs.

I hope this was an interesting little look into something more accessible than kernel internals. I’m hoping to write some disk drivers next, so I can start loading stuff from the filesystem, rather than a small, limited initial ramdisk. This will also let me reclaim the memory occupied by it, though I have no idea whether it’s even reserved in the first place, or whether the fact that the loader places it at the very end of physical memory means we’ve been lucky enough not to allocate it away. Hmm… future problems. Lovely.

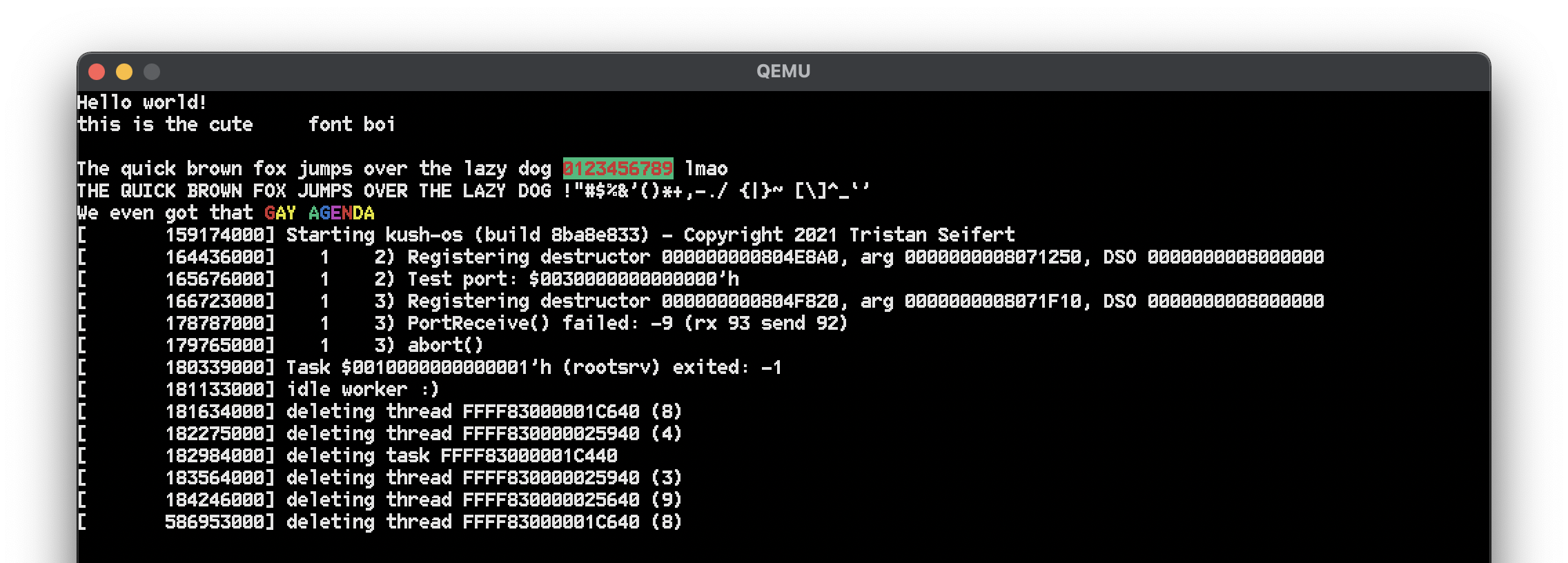

Totally unrelated, but in getting most of these drivers working for amd64 for the first time, I had to parse the bootloader info structure, which included the framebuffer address… I played around a little bit and built a small framebuffer console: it supports basic ANSI escape sequences for foreground and background color, cursor positioning, and screen/line clearing.

The font is based on Robey Pointer’s Bizcat with some minor tweaks. I’m a big fan of its appearance – particularly, the thicker lines – compared to the standard VGA font and Terminus, which are about the only two options you really see on framebuffer consoles these days. Though the font used by Sun workstations is pretty neat, too.

[1] The one obvious issue here is how the remote end of the connection going away during a call is reported. Thankfully, in C++, this can be done the same way as any other error: by throwing an exception, albeit of a custom type so that these errors can be handled specially by the caller if desired. What this means is that using RPC implies exception support, but I find that the overhead (mainly in terms of binary size) is worth it for how much simpler it makes code.

[2] This API, known as Local Interprocess Communication is part of the (undocumented) native NT API not directly available to applications. While neat, this requires a lot of kernel support and didn’t provide the “free” method call bridging I was after.

[3] These “nice” APIs are something a lot more libraries and systems could do with. A little bit of thought while designing an interface can go huge ways in making it much, much more difficult to abuse in problematic ways: whether those are undefined behavior, destructive actions, bugs, or good old security holes.

[4] In theory, thanks to how structs are encoded by Cap’n Proto, there is a fair amount of forward compatibility we get for free. Arguments can always be added or renamed without causing issues.

[5] Because it dispenses ports… that’s the only reason why it’s named that. It’s also the one remaining RPC interface that’s a) implemented in straight C, and b) uses plain structs with manual serialization code.